omp compiler directives

- add `-fopenmp` to the `CFLAGS` line in the `Makefile`
- using integer variables `tid` and `ncores` in `main()`, find out how many cores there are at your disposal via this piece of code (`omp_get_thread_num()` and `omp_get_num_threads()` are declared in `<omp.h>`):

      #pragma omp parallel private(tid)
      {
          if ((tid = omp_get_thread_num()) == 0)
              ncores = omp_get_num_threads();
      }

- set integer variable `chunk` to the number of rows (the height of the image to be ray traced) divided by the number of cores; this stipulates how many rows of the image each core will process. We parallelize the ray tracer by chopping up the image into horizontal bands and having each core process its band of pixels simultaneously
- insert this compiler directive immediately above the nested `for` loops iterating over the image pixels (x,y):

      #pragma omp parallel for \
          shared(...fill this in...) \
          private(...fill this in...) \
          schedule(static,chunk)

- make sure that the nested loops write to `img`, an array of (pointer to) type `rgb_t<unsigned char>` allocated to size `w*h`, and that the code inside the loops does not write to `std::cout` directly; provide a second doubly-nested loop to output the pixels after the parallelized nested loops finish
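
The steps above can be pulled together in a small, self-contained sketch. Everything specific to it is an assumption: `rgb_t<T>` is a minimal stand-in pixel struct, `shade()` is a hypothetical placeholder for tracing the ray through pixel (x,y), and the raw RGB byte output format is assumed rather than taken from the assignment's code. `#pragma omp parallel for` splits only the loop immediately below it, so the outer loop runs over rows `y` to produce horizontal bands. Loop variables declared inside the `for` statements are automatically private to each thread, so the sketch does not spell out the `shared`/`private` clauses you are asked to fill in above. The `#ifdef _OPENMP` guard lets the same file also build serially without `-fopenmp`.

```cpp
#include <cassert>
#include <iostream>
#include <vector>
#ifdef _OPENMP
#include <omp.h>
#endif

// Minimal stand-in pixel type (assumption; the assignment's rgb_t may differ).
template <typename T>
struct rgb_t {
    T r, g, b;
};

// Hypothetical placeholder for tracing the ray through pixel (x, y):
// returns a red/green gradient so the result is easy to check.
rgb_t<unsigned char> shade(int x, int y, int w, int h) {
    rgb_t<unsigned char> c;
    c.r = static_cast<unsigned char>(255 * x / (w > 1 ? w - 1 : 1));
    c.g = static_cast<unsigned char>(255 * y / (h > 1 ? h - 1 : 1));
    c.b = 0;
    return c;
}

// Number of threads in a parallel team (normally one per core);
// returns 1 when compiled without -fopenmp.
int count_cores() {
    int ncores = 1;
#ifdef _OPENMP
    int tid;
#pragma omp parallel private(tid)
    {
        if ((tid = omp_get_thread_num()) == 0)
            ncores = omp_get_num_threads();  // ncores is shared by default
    }
#endif
    return ncores;
}

// Fill img (w*h pixels, row-major) in horizontal bands of `chunk` rows.
void render(rgb_t<unsigned char> *img, int w, int h, int ncores) {
    int chunk = h / ncores;    // rows per core
    if (chunk < 1) chunk = 1;  // more cores than rows
    assert(chunk > 0);         // schedule(static, chunk) needs chunk > 0
    // Only the outer (row) loop is split among threads; if h is not a multiple
    // of ncores, the leftover rows form extra chunks handed out round-robin.
#pragma omp parallel for schedule(static, chunk)
    for (int y = 0; y < h; y++)
        for (int x = 0; x < w; x++)              // x is private: declared here
            img[y * w + x] = shade(x, y, w, h);  // no std::cout inside the loop
}

// Serial output loop, run only after the parallel loop has finished.
// Writing raw RGB bytes in row order (like a binary PPM body) is an assumption.
void write_pixels(std::ostream &out, const rgb_t<unsigned char> *img, int w, int h) {
    for (int y = 0; y < h; y++)
        for (int x = 0; x < w; x++) {
            const rgb_t<unsigned char> &p = img[y * w + x];
            out.put(static_cast<char>(p.r))
               .put(static_cast<char>(p.g))
               .put(static_cast<char>(p.b));
        }
}
```

In `main()` one might call `count_cores()`, allocate `std::vector<rgb_t<unsigned char>> img(w * h)`, call `render(img.data(), w, h, ncores)`, and only then call `write_pixels(std::cout, img.data(), w, h)`. Keeping all output in the serial loop matters because writes to `std::cout` from several threads would interleave unpredictably.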

obj->getlast_hit()

- rewrite `ray_t::trace()` so that it no longer needs an `object_t *last_hit` argument
- rewrite `model::find_closest()` so that it updates the `vec_t` hit point and the normal at that point (for the plane, this is just the plane's normal; for the sphere, it is calculated on the fly); these variables are handled in a similar manner to `dist`, the distance from the ray's current position to the closest hit point
- rewrite all the objects' `hits()` routines so that they update the hit point and normal; the objects' own normal must not be altered, and no `last_hit` should be stored (this member should be removed from the `object_t` class)
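
A minimal sketch of a `last_hit`-free interface, assuming `hits()` returns the distance along the ray (or a negative value on a miss) and reports the hit point and normal through reference parameters. The `vec_t`, `ray_t`, `plane_t`, and `sphere_t` definitions, their member names, and the `EPS` tolerance are hypothetical stand-ins rather than the assignment's classes, and `find_closest()` is written as a free function over a list of objects instead of a `model` member. Because `hits()` is `const` and writes only to variables owned by the caller, several threads can trace rays against the same shared objects at once without a data race, which is presumably why `last_hit` has to go.

```cpp
#include <cassert>
#include <cmath>
#include <limits>
#include <vector>

// Hypothetical minimal vector type and helpers.
struct vec_t {
    double x, y, z;
};
vec_t operator+(vec_t a, vec_t b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
vec_t operator-(vec_t a, vec_t b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
vec_t operator*(double s, vec_t a) { return {s * a.x, s * a.y, s * a.z}; }
double dot(vec_t a, vec_t b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
vec_t normalize(vec_t a) {
    double len = std::sqrt(dot(a, a));
    assert(len > 0.0);
    return (1.0 / len) * a;
}

struct ray_t {
    vec_t pos, dir;  // dir assumed to be unit length
};

const double EPS = 1e-6;  // assumed tolerance: ignore hits at (almost) zero distance

struct object_t {
    virtual ~object_t() = default;
    // Distance to the hit along the ray, or -1 on a miss. Writes the hit point
    // and normal to the caller's variables and never modifies *this.
    virtual double hits(const ray_t &ray, vec_t &hit, vec_t &normal) const = 0;
};

struct plane_t : object_t {
    vec_t point, n;  // a point on the plane and its (unit) normal
    plane_t(vec_t p, vec_t nn) : point(p), n(nn) {}
    double hits(const ray_t &ray, vec_t &hit, vec_t &normal) const override {
        double denom = dot(n, ray.dir);
        if (std::fabs(denom) < EPS) return -1.0;  // ray parallel to the plane
        double t = dot(n, point - ray.pos) / denom;
        if (t < EPS) return -1.0;                 // plane is behind the ray
        hit = ray.pos + t * ray.dir;
        normal = n;                               // a copy; n itself is untouched
        return t;
    }
};

struct sphere_t : object_t {
    vec_t center;
    double radius;
    sphere_t(vec_t c, double r) : center(c), radius(r) {}
    double hits(const ray_t &ray, vec_t &hit, vec_t &normal) const override {
        vec_t oc = ray.pos - center;
        double b = dot(oc, ray.dir);          // half of the usual b, since |dir| = 1
        double c = dot(oc, oc) - radius * radius;
        double disc = b * b - c;
        if (disc < 0.0) return -1.0;          // ray misses the sphere
        double s = std::sqrt(disc);
        double t = -b - s;                    // nearer root first
        if (t < EPS) t = -b + s;              // ray starts inside the sphere
        if (t < EPS) return -1.0;
        hit = ray.pos + t * ray.dir;
        normal = normalize(hit - center);     // computed on the fly
        return t;
    }
};

// Returns the closest object hit (or nullptr) and updates dist, hit and normal,
// which are all owned by the caller (e.g. local to one thread's trace()).
const object_t *find_closest(const std::vector<const object_t *> &objs, const ray_t &ray,
                             double &dist, vec_t &hit, vec_t &normal) {
    const object_t *closest = nullptr;
    dist = std::numeric_limits<double>::infinity();
    for (const object_t *obj : objs) {
        vec_t h, n;
        double d = obj->hits(ray, h, n);
        if (d > 0.0 && d < dist) {
            dist = d;
            hit = h;
            normal = n;
            closest = obj;
        }
    }
    return closest;
}
```

The design choice here is that every piece of per-ray state (`dist`, `hit`, `normal`) lives in the caller's stack frame, so each thread automatically gets its own copy.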

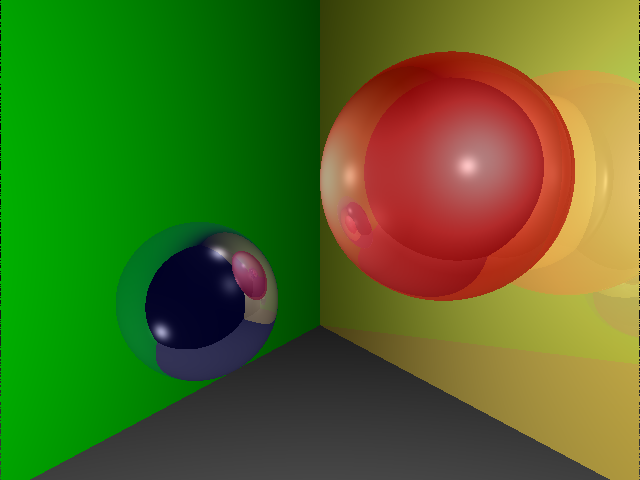

Comment out the `#pragma omp` directive to run the serial version (once your parallelized version works). On my dual-core laptop, the parallel version takes 1.517 seconds, while the serial version takes 2.934 seconds: not quite a factor-of-2 reduction, but about 1.9, which is noticeable. On a quad-core machine, the speedup should be even larger.
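
As a quick check on the timings above, the speedup $S$ is the serial time divided by the parallel time, and dividing it by the number of cores $p$ gives the parallel efficiency $E$:

$$
S = \frac{T_{\text{serial}}}{T_{\text{parallel}}} = \frac{2.934\ \text{s}}{1.517\ \text{s}} \approx 1.93,
\qquad
E = \frac{S}{p} = \frac{1.93}{2} \approx 0.97
$$

That is roughly 97% of the ideal two-fold speedup; on a quad-core machine the ideal would be $S = 4$.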